Perception Isn’t Reality: What Research Says About Using Smart Replies

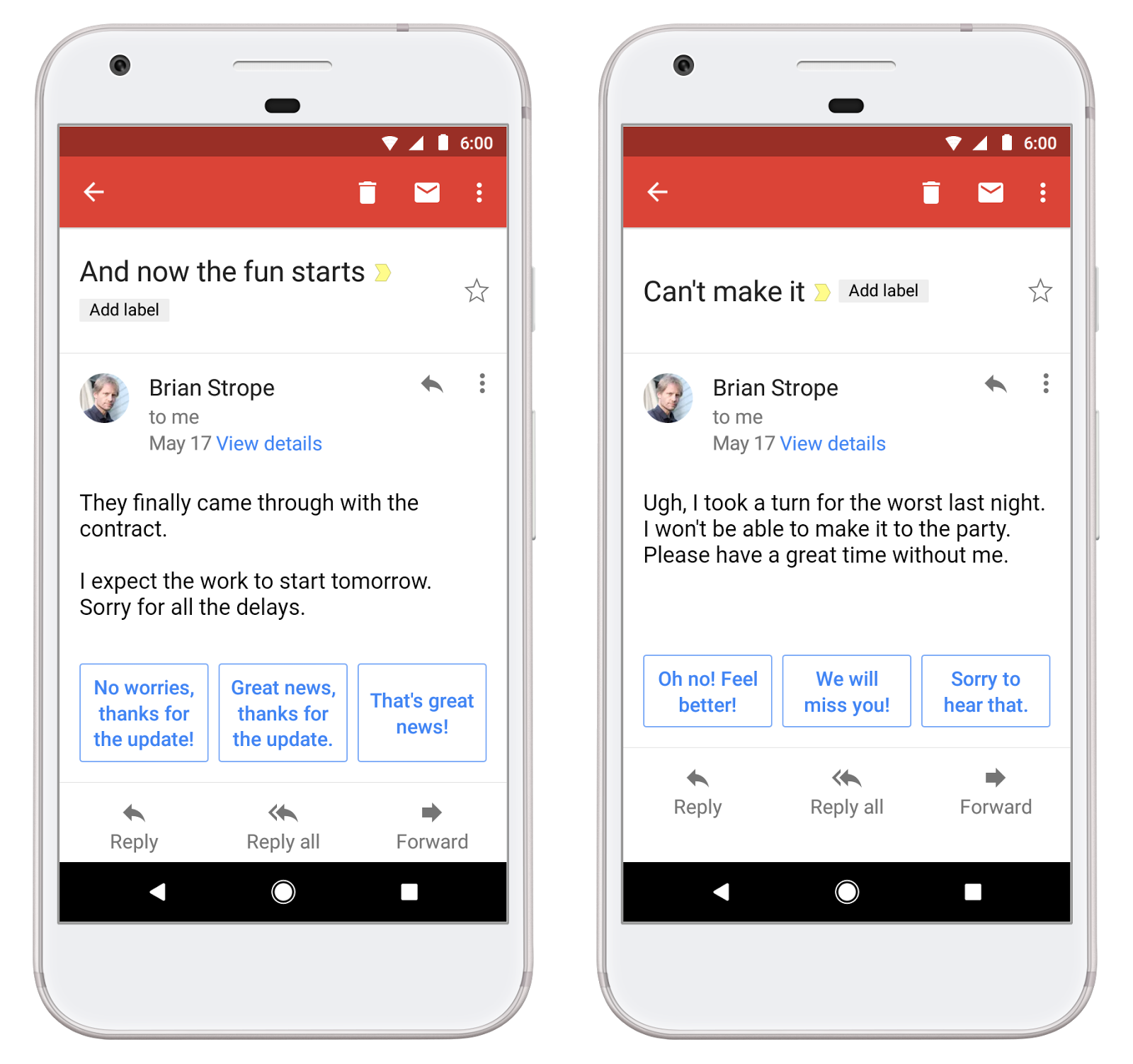

With all the buzz around generative AI, it’s easy to forget that this technology has been around for a while. One of the first places I noticed it was in Gmail. I remember the first time I started drafting a reply and Google suggested a few possible responses. These are known as “smart replies.” The phrases were short and emphatic: “Sounds good!” “Thank you!” “Not right now.”

None of these suggested responses fit what I actually wanted to say, so I ignored them. In fact, I’ve mostly ignored them ever since they showed up in Gmail. However, I recently learned this isn’t true for everyone.

According to a 2023 paper in Scientific Reports, a Nature Portfolio journal, that discusses some interesting research findings about smart replies, “As of 2017, algorithmic response constituted 12% of all messages sent through Gmail, representing about 6.7 billion emails written by AI on our behalf each day.” It’s reasonable to assume this percentage has grown since then.

The paper, written by nine authors across academic disciplines, argues that there has been significant under-investment in studying the social impact of generative AI such as smart replies. To address this, the authors designed a study to learn how smart replies affect both the senders and receivers of messages. They built a custom messaging app that integrated Google’s Reply API to generate the smart replies, and they recruited 219 pairs of participants to exchange messages. Neither participant knew whether their partner had access to smart replies or whether the partner used them.

Key Insights

The results were fascinating. Simply showing smart replies increased their use: when they were available, people tended to use them. Moreover, even when they weren’t used, they influenced the kind of response people drafted. And, perhaps not surprisingly, using smart replies also sped up communication overall.

But most interesting of all was a disconnect in emotional sentiment between cases where the receiver suspected the sender had used a smart reply and cases where the sender actually had.

If receivers perceived that senders were using smart replies, they rated them as less cooperative and more dominant in the conversation (not good). However, when senders actually used smart replies, receivers viewed their partner with more positive sentiment. Remember, neither partner knew whether the other was being shown smart replies or whether they used them.

“This shows,” they write, “that people who appear to be using smart replies in conversation pay an interpersonal toll, even if they are not actually using smart replies.” When smart reply use increased and the receiver did not suspect it, ratings of the partner’s cooperation and sense of affiliation increased. Perhaps this is one of the benefits of the lack of transparency in these systems. As previous studies have shown, humans are predisposed to trust other humans over computers.

The study provides evidence that AI can shape both our language and our perceptions of others. I’d argue that we’re just at the beginning of a new era in which generative AI will create more of the content and communication we consume. As the authors state, “We have little insight in how regularly people are allowing AI to help them communicate or the potential long-term implications of the interference of AI in human communication.”

Read more about this study and its conclusions.

Until next time,

Andy